Today I am going to show a simple Perl script that analyzes an NS2 trace file, using the AODV routing protocol as an example. As you know, when you run a simulation, NS2 generates a trace file such as sometrace.tr. It contains a lot of information about your simulation results, but running the NS2 simulator is of little use if you do not know how to analyze this file. In this post we will learn how to compute the delivery ratio and the message overhead.
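As a rough sketch of what these two metrics mean, the snippet below uses the common definitions: delivery ratio as data packets received divided by data packets sent, and message overhead as control packets sent per data packet delivered. The helper names `delivery_ratio` and `message_overhead` and the counts are my own assumptions for illustration, and analyze.pl may define the overhead differently.

```perl
use strict;
use warnings;

# Hypothetical helpers; names and definitions are assumptions,
# not taken from analyze.pl.

# Delivery ratio: data packets received by destination agents
# divided by data packets sent by source agents.
sub delivery_ratio {
    my ($data_sent, $data_received) = @_;
    return 0 unless $data_sent;        # avoid division by zero
    return $data_received / $data_sent;
}

# Message overhead, taken here as routing (control) packets sent
# per data packet delivered.
sub message_overhead {
    my ($control_sent, $data_received) = @_;
    return 0 unless $data_received;    # avoid division by zero
    return $control_sent / $data_received;
}

# Made-up counts, for illustration only.
printf "delivery ratio:   %.2f\n", delivery_ratio(200, 180);
printf "message overhead: %.2f\n", message_overhead(90, 180);
```

The counts fed into these helpers come from the sent, received and dropped lines of the trace file, which the rest of the post deals with.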

First, go to your home directory and create a bin directory there. We will keep the trace analyzer script here so that we can run it from anywhere (provided the directory is on your PATH).

Download the analyze.pl file, which is attached to the post, to the bin directory. I will explain the main points of the code. The following code opens a file to write the simulation results to.

$ofile="simulation_result.csv";

open OUT, ">$ofile" or die "$0 cannot open output file $ofile: $!";

Usually, each line in the trace file starts with a letter such as r, s, D or N, and each letter has a meaning. For the detailed meaning of each letter, refer to the NS Manual Page. The script extracts the lines that start with "s", which means a sent packet. These may be control packets (AODV) or data packets (cbr). We are only interested in packets sent at the routing layer (RTR). If you enable MAC trace, the packets sent or received by the MAC layer are also shown.

REQUEST - AODV Route Request (RREQ) packets

REPLY - AODV Route Reply (RREP) packets

AGT - packets sent by an agent such as cbr, udp, tcp
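To make this concrete, here is a minimal sketch of how sent packets could be tallied. It is not the code from analyze.pl: the helper name `count_sent_rtr`, the field positions, and the hand-written sample lines are assumptions based on the old NS2 wireless trace format (event, time, node, layer, flags, packet id, type, size, ...).

```perl
use strict;
use warnings;

# Hypothetical helper: tally packets sent at the routing layer (RTR).
# Assumed whitespace-separated fields:
#   0 event, 1 time, 2 node, 3 layer, 4 flags, 5 packet id, 6 type, 7 size
sub count_sent_rtr {
    my @lines = @_;
    my %sent = (REQUEST => 0, REPLY => 0, cbr => 0);
    for my $line (@lines) {
        my @f = split ' ', $line;
        next unless @f >= 7;                              # skip short or malformed lines
        next unless $f[0] eq 's' && $f[3] eq 'RTR';       # sent at the routing layer only
        if    ($line =~ /\(REQUEST\)/) { $sent{REQUEST}++ }   # AODV RREQ
        elsif ($line =~ /\(REPLY\)/)   { $sent{REPLY}++ }     # AODV RREP
        elsif ($f[6] eq 'cbr')         { $sent{cbr}++ }       # data packet
    }
    return %sent;
}

# Sample lines written by hand in the assumed format (not real simulation output).
my @sample = (
    's 1.000000000 _0_ RTR  --- 0 AODV 48 [0 0 0 0] ------- [0:255 -1:255 30 0] [0x2 1 1 [1 0] [0 4]] (REQUEST)',
    's 1.010000000 _1_ RTR  --- 0 AODV 44 [0 0 0 0] ------- [1:255 0:255 30 0] [0x4 1 [1 4] 10.000000] (REPLY)',
    's 2.000000000 _0_ AGT  --- 1 cbr 512 [0 0 0 0] ------- [0:0 1:0 32 0] [0] 0 0',
    's 2.000000000 _0_ RTR  --- 1 cbr 532 [0 0 0 0] ------- [0:0 1:0 30 1] [0] 0 0',
    'r 2.010000000 _1_ AGT  --- 1 cbr 532 [13a 1 0 800] ------- [0:0 1:0 30 1] [0] 1 0',
);

my %sent = count_sent_rtr(@sample);
print "RREQ sent: $sent{REQUEST}, RREP sent: $sent{REPLY}, cbr sent at RTR: $sent{cbr}\n";
```

Matching on the trailing (REQUEST) and (REPLY) labels keeps the sketch independent of the exact layout of the bracketed AODV header fields.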

The script also counts the packets received in each category.
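A minimal sketch of counting received packets might look like the following. Again, this is an illustration rather than the code from analyze.pl: the helper name `count_received`, the field positions and the sample lines are assumptions based on the old NS2 wireless trace format.

```perl
use strict;
use warnings;

# Hypothetical helper: tally received packets by trace layer and packet type.
# Assumed whitespace-separated fields:
#   0 event, 1 time, 2 node, 3 layer, 4 flags, 5 packet id, 6 type, 7 size
sub count_received {
    my @lines = @_;
    my %recv;
    for my $line (@lines) {
        my @f = split ' ', $line;
        next unless @f >= 7 && $f[0] eq 'r';      # received packets only
        my $key = join ':', $f[3], $f[6];         # e.g. "AGT:cbr" or "RTR:AODV"
        $recv{$key}++;
    }
    return %recv;
}

# Sample lines written by hand in the assumed format (not real simulation output).
my @sample = (
    'r 1.002000000 _1_ RTR  --- 0 AODV 48 [0 ffffffff 0 800] ------- [0:255 -1:255 30 0] [0x2 1 1 [1 0] [0 4]] (REQUEST)',
    'r 2.010000000 _1_ AGT  --- 3 cbr 532 [13a 1 0 800] ------- [0:0 1:0 30 1] [0] 1 0',
    'r 2.510000000 _1_ AGT  --- 4 cbr 532 [13a 1 0 800] ------- [0:0 1:0 30 1] [1] 1 0',
);

my %recv = count_received(@sample);
for my $key (sort keys %recv) {
    print "$key: $recv{$key}\n";
}
```

The "AGT:cbr" count is the one that would feed the delivery ratio, since it reflects data packets that actually reached the destination agent.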

Finally, dropped packets are counted using the following code:

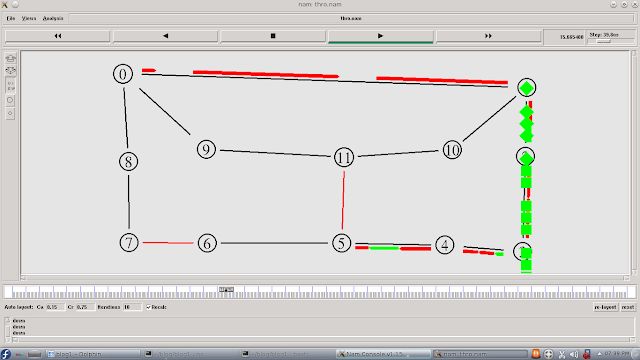
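For readers who prefer text to a screenshot, here is a minimal sketch of the same idea. It is not a transcription of the image: the helper name `count_dropped` is hypothetical, and I am assuming that in "D" lines the fifth field carries the drop reason (for example NRTE for "no route" or COL for a MAC collision).

```perl
use strict;
use warnings;

# Hypothetical helper: count dropped packets in total and per drop reason.
# Assumed whitespace-separated fields for drop lines:
#   0 event (D), 1 time, 2 node, 3 layer, 4 drop reason, 5 packet id, 6 type, 7 size
sub count_dropped {
    my @lines = @_;
    my %dropped = (total => 0, by_reason => {});
    for my $line (@lines) {
        my @f = split ' ', $line;
        next unless @f >= 7 && $f[0] eq 'D';      # dropped packets only
        $dropped{total}++;
        $dropped{by_reason}{ $f[4] }++;
    }
    return \%dropped;
}

# Sample lines written by hand in the assumed format (not real simulation output).
my @sample = (
    'D 5.300000000 _2_ RTR  NRTE 7 cbr 532 [0 0 0 0] ------- [0:0 1:0 30 0] [4] 0 0',
    'D 6.100000000 _3_ MAC  COL 9 cbr 590 [13a 1 0 800] ------- [0:0 1:0 30 1] [5] 0 0',
    'D 7.200000000 _2_ RTR  NRTE 11 cbr 532 [0 0 0 0] ------- [0:0 1:0 30 0] [6] 0 0',
    's 8.000000000 _0_ AGT  --- 12 cbr 512 [0 0 0 0] ------- [0:0 1:0 32 0] [7] 0 0',
);

my $dropped = count_dropped(@sample);
print "dropped in total: $dropped->{total}\n";
for my $reason (sort keys %{ $dropped->{by_reason} }) {
    print "  $reason: $dropped->{by_reason}{$reason}\n";
}
```

Keeping the per-reason breakdown is optional, but it helps to tell routing failures apart from losses at the MAC layer.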

Now we will analyze an example file. In this post I have written about simulating a WSN with the AODV protocol; download it and run the command below. (I am assuming you have already put the analyze.pl file into your bin directory.) Here is the full source code of the analyzer: analyze.pl. More trace analyzer code is available in this archive.

cat trace-aodv-802-15-4.tr | analyze.pl